Measuring GANs Objectively

Markus Liedl, 13th January 2018

TL;DR. I'm describing a simple and pragmatic approach to measure the quality of the generator in a GAN. I'm simply counting how many minibatches a fresh, randomly initialized discriminator needs to make good predictions!

This morning a nice idea came to my mind. People spent some effort to objectively measure the quality of the generator in generative adversarial networks. The approach I'm describing here is refreshingly simple:

I'm training a new discriminator for every test and count how many minibatches of real and fake images it needs till it can confidently decide between the two.

Intuitivly the new discriminator needs fewer steps if the generated data is far from the real data and it needs more steps if the generated data is close to the real data.

As long as the generator gets better and better, the real and the generated data become more similar and more difficult to distinguish. It's more effort to train a classifier when the data in both classes is similar. I'm suggesting to count the number of minibatches needed to train a new, somewhat good discriminator.

This count is quite noisy, so I'm doing it ten times.

Below I'm calling this new discriminator the measure discriminator.

Here is a DCGAN run on CELEBA with batchsize 128 and 1024 latent codes:

The x axis is GAN iterations divided by 100. The y axis is the number of measure discriminator iterations.

Here the same net and data with batchsize 16 and 128 latent codes:

The x axis is GAN iterations divided by 500. The y axis is again the number of measure discriminator iterations. Note the number of iterations needed is larger, even in the beginning of the training. That's due to the smaller batchsize that is also used for the measurements.

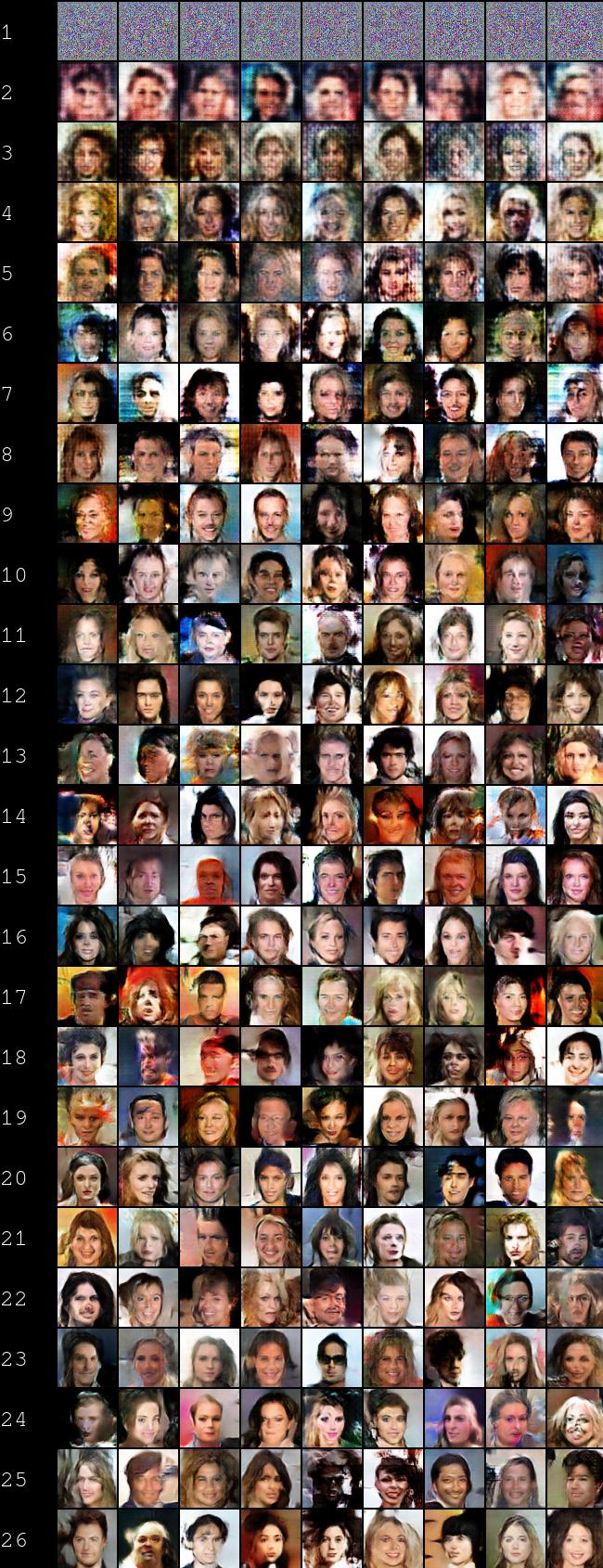

Another run with batchsize 64 and 128 latent codes:

X axis is GAN iterations divided by 500. Below are generated samples from that third run. The numbers in the charts X axis correspond to the rows below, so you can check for yourself if you think that there is any correlation between image quality and the value I'm measuring.

Advantages

- self contained; no obscure hidden activations of pretrained models are needed

- it's reasonable to expect this way of testing can detect missing modes. If all modes of the real images are present in the generated images the measure discriminator has a difficult job to do.

- can detect generators that make no progress anymore

- comparing different GAN hyperparameters, generator architectures or discriminator architectures is possible by using the same measure discriminator architecture and hyperparameters!

- No need to limit the discriminators capacity like Wasserstein GAN does or regularize it in some special way.

- Is applicable to non-adversarial generative modeling like GLO (Generative Latent Optimization) (but GLO isn't suffering from missing modes anyway).

Disadvantages

- testing gets slower and slower; a perfect generator would fool the measure discriminator forever!

- We wanted to measure how nice the generated images are. That's not really what I'm doing here.

Details

The measure discriminators are trained in the same way as the GAN discriminator. The minibatches consist of randomly selected real images, and freshly generated fake images.

Using Adam to optimize the generator, discriminator and measure discriminator; LeakyRelu 0.4 and ordinary Batch Normalization everywhere, all convolutions have kernel width 4.

The criterion to stop the measure discriminator is: Mean prediction for the real images in one minibatch above 0.9 and mean prediction for the fake images below 0.1.

I'm just validating the idea here. All training runs were quite short. The main point is that starting up a new discriminator is more and more effort and that it's reasonable to expect that this effort corresponds to generator quality.

Further

- Validate that this score here really stops increasing once a generator operates at its capacity limit.

- Validate that my guesses about detecting missing modes are correct.

- One could use differently parameterized measure discriminators to get different views on the problem.

- "It's more effort to train a classifier when the data in both classes is similar." Is this only common sense? Are there studies? Hmm, can there be proofs? Is "similar" well defined? Is it helpful to speculate how much information the measure discriminator can absorb in every training step?

14th January

I'm currently regenerating images for training the ten measure discriminators. That could be optimized to generate only one set of images that is used for the different discriminators. Every one of the ten measure discriminators starts with random weights. So there is no overfitting possible.

Another advantage is that this kind of testing allows quite concrete statements about generator quality, like "This measure discriminator needed to see 3264 fake and real images before being able to differentiate between them." (But the batchsize is also important) Or the other way: "This generator could fool a new discriminator for 51 Adam steps"

Deep Learning

Follow me on twitter.com/markusliedl

I'm offering deep learning trainings and workshops in the Munich area.